How to Read a Multiple-Line Comma-Separated Text File and Store It in an Array in Java


Delimited text format in Azure Data Factory and Azure Synapse Analytics

APPLIES TO: Azure Data Factory, Azure Synapse Analytics

Follow this article when you want to parse delimited text files or write data into delimited text format.

Delimited text format is supported for the following connectors:

- Amazon S3

- Amazon S3 Compatible Storage

- Azure Blob

- Azure Data Lake Storage Gen1

- Azure Data Lake Storage Gen2

- Azure Files

- File System

- FTP

- Google Cloud Storage

- HDFS

- HTTP

- Oracle Cloud Storage

- SFTP

Dataset properties

For a full list of sections and properties available for defining datasets, see the Datasets article. This section provides a list of properties supported by the delimited text dataset.

| Property | Description | Required |
|---|---|---|
| type | The type property of the dataset must be set to DelimitedText. | Yes |
| location | Location settings of the file(s). Each file-based connector has its own location type and supported properties under location. | Yes |
| columnDelimiter | The character(s) used to separate columns in a file. The default value is comma `,`. When the column delimiter is defined as an empty string, which means no delimiter, the whole line is taken as a single column. Currently, an empty-string column delimiter is supported only for mapping data flow, not for Copy activity. | No |
| rowDelimiter | The single character or "\r\n" used to separate rows in a file. On read, the default value is any of ["\r\n", "\r", "\n"]; on write, the default is "\n" for mapping data flow and "\r\n" for Copy activity. When the row delimiter is set to no delimiter (empty string), the column delimiter must also be set to no delimiter (empty string), which means the entire content is treated as a single value. Currently, an empty-string row delimiter is supported only for mapping data flow, not for Copy activity. | No |
| quoteChar | The single character used to quote a column value if it contains the column delimiter. The default value is double quotes `"`. When quoteChar is defined as an empty string, there is no quote character and column values are not quoted; escapeChar is then used to escape the column delimiter and itself. | No |
| escapeChar | The single character used to escape quotes inside a quoted value. The default value is backslash `\`. When escapeChar is defined as an empty string, quoteChar must be set to an empty string as well; in that case, make sure no column values contain delimiters. | No |
| firstRowAsHeader | Specifies whether to treat/make the first row as a header line with names of columns. Allowed values are true and false (default). When firstRowAsHeader is false, note that UI data preview and Lookup activity output auto-generate column names as Prop_{n} (starting from 0), Copy activity requires explicit mapping from source to sink and locates columns by ordinal (starting from 1), and mapping data flow lists and locates columns with names as Column_{n} (starting from 1). | No |
| nullValue | Specifies the string representation of a null value. The default value is an empty string. | No |
| encodingName | The encoding type used to read/write text files. Allowed values are as follows: "UTF-8", "UTF-8 without BOM", "UTF-16", "UTF-16BE", "UTF-32", "UTF-32BE", "US-ASCII", "UTF-7", "BIG5", "EUC-JP", "EUC-KR", "GB2312", "GB18030", "JOHAB", "SHIFT-JIS", "CP875", "CP866", "IBM00858", "IBM037", "IBM273", "IBM437", "IBM500", "IBM737", "IBM775", "IBM850", "IBM852", "IBM855", "IBM857", "IBM860", "IBM861", "IBM863", "IBM864", "IBM865", "IBM869", "IBM870", "IBM01140", "IBM01141", "IBM01142", "IBM01143", "IBM01144", "IBM01145", "IBM01146", "IBM01147", "IBM01148", "IBM01149", "ISO-2022-JP", "ISO-2022-KR", "ISO-8859-1", "ISO-8859-2", "ISO-8859-3", "ISO-8859-4", "ISO-8859-5", "ISO-8859-6", "ISO-8859-7", "ISO-8859-8", "ISO-8859-9", "ISO-8859-13", "ISO-8859-15", "WINDOWS-874", "WINDOWS-1250", "WINDOWS-1251", "WINDOWS-1252", "WINDOWS-1253", "WINDOWS-1254", "WINDOWS-1255", "WINDOWS-1256", "WINDOWS-1257", "WINDOWS-1258". Note that mapping data flow doesn't support UTF-7 encoding. | No |
| compressionCodec | The compression codec used to read/write text files. Allowed values are bzip2, gzip, deflate, ZipDeflate, TarGzip, Tar, snappy, or lz4. The default is no compression. Note that Copy activity currently doesn't support "snappy" and "lz4", and mapping data flow doesn't support "ZipDeflate", "TarGzip", and "Tar". When using Copy activity to decompress ZipDeflate/TarGzip/Tar file(s) and write to a file-based sink data store, files are by default extracted to the folder `<path specified in dataset>/<folder named as source compressed file>/`; use preserveZipFileNameAsFolder/preserveCompressionFileNameAsFolder on the Copy activity source to control whether to preserve the name of the compressed file(s) as folder structure. | No |
| compressionLevel | The compression ratio. Allowed values are Optimal or Fastest. Fastest: the compression operation should complete as quickly as possible, even if the resulting file is not optimally compressed. Optimal: the compression operation should compress optimally, even if it takes longer to complete. For more information, see the Compression Level topic. | No |
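The way columnDelimiter, quoteChar, and escapeChar interact is easiest to see on a single row. The Java sketch below splits one row into fields. It is a hypothetical illustration, not the service's parser: the class and method names are made up, it assumes single-character settings (no empty-string quote or escape character), and it leaves row splitting to the caller. When escapeChar equals quoteChar, as in the dataset example that follows, a doubled quote inside a quoted value becomes one literal quote.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of any Azure SDK: splits one row into fields
// using single-character columnDelimiter, quoteChar and escapeChar settings.
public class DelimitedLineParser {

    public static List<String> parse(String line, char delimiter, char quote, char escape) {
        List<String> fields = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        int i = 0;
        while (i < line.length()) {
            char c = line.charAt(i);
            if (inQuotes) {
                boolean nextIsQuote = i + 1 < line.length() && line.charAt(i + 1) == quote;
                if (c == escape && nextIsQuote) {
                    // escapeChar + quoteChar is a literal quote ("" when escape == quote)
                    current.append(quote);
                    i += 2;
                    continue;
                }
                if (c == quote) {
                    inQuotes = false;      // closing quote
                } else {
                    current.append(c);     // delimiters inside quotes are plain data
                }
            } else if (c == quote) {
                inQuotes = true;           // opening quote
            } else if (c == delimiter) {
                fields.add(current.toString());
                current.setLength(0);
            } else {
                current.append(c);
            }
            i++;
        }
        fields.add(current.toString());    // last field has no trailing delimiter
        return fields;
    }

    public static void main(String[] args) {
        // Same settings as the dataset example: delimiter ',', quoteChar '"', escapeChar '"'
        System.out.println(parse("1,\"Smith, John\",\"say \"\"hi\"\"\"", ',', '"', '"'));
        // prints [1, Smith, John, say "hi"]

        // Default escapeChar (backslash)
        System.out.println(parse("2,\"a \\\"quoted\\\" word\"", ',', '"', '\\'));
        // prints [2, a "quoted" word]
    }
}
```

The comma inside "Smith, John" stays in the field because it appears between quotes. That is the reason quoteChar exists.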

Below is an example of a delimited text dataset on Azure Blob Storage:

```json
{
    "name": "DelimitedTextDataset",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "<Azure Blob Storage linked service name>",
            "type": "LinkedServiceReference"
        },
        "schema": [ < physical schema, optional, retrievable during authoring > ],
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "containername",
                "folderPath": "folder/subfolder"
            },
            "columnDelimiter": ",",
            "quoteChar": "\"",
            "escapeChar": "\"",
            "firstRowAsHeader": true,
            "compressionCodec": "gzip"
        }
    }
}
```

Copy activity properties

For a full list of sections and properties available for defining activities, see the Pipelines article. This section provides a list of properties supported by the delimited text source and sink.

Delimited text as source

The following properties are supported in the copy activity *source* section.

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity source must be set to DelimitedTextSource. | Yes |
| formatSettings | A group of properties. Refer to the Delimited text read settings table below. | No |
| storeSettings | A group of properties on how to read data from a data store. Each file-based connector has its own supported read settings under storeSettings. | No |

Supported delimited text read settings under formatSettings:

| Property | Description | Required |
|---|---|---|
| type | The type of formatSettings must be set to DelimitedTextReadSettings. | Yes |
| skipLineCount | Indicates the number of non-empty rows to skip when reading data from input files. If both skipLineCount and firstRowAsHeader are specified, the lines are skipped first and then the header information is read from the input file. | No |
| compressionProperties | A group of properties on how to decompress data for a given compression codec. | No |
| preserveZipFileNameAsFolder (under compressionProperties->type as ZipDeflateReadSettings) | Applies when the input dataset is configured with ZipDeflate compression. Indicates whether to preserve the source zip file name as folder structure during copy. When set to true (default), the service writes unzipped files to `<path specified in dataset>/<folder named as source zip file>/`. When set to false, the service writes unzipped files directly to `<path specified in dataset>`. Make sure you don't have duplicated file names in different source zip files, to avoid racing or unexpected behavior. | No |
| preserveCompressionFileNameAsFolder (under compressionProperties->type as TarGZipReadSettings or TarReadSettings) | Applies when the input dataset is configured with TarGzip/Tar compression. Indicates whether to preserve the source compressed file name as folder structure during copy. When set to true (default), the service writes decompressed files to `<path specified in dataset>/<folder named as source compressed file>/`. When set to false, the service writes decompressed files directly to `<path specified in dataset>`. Make sure you don't have duplicated file names in different source files, to avoid racing or unexpected behavior. | No |
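The ordering rule for skipLineCount and firstRowAsHeader is easy to get wrong. The rule is: skip the requested number of non-empty lines first, then treat the next line as the header. The small Java sketch below shows that order. It is a hypothetical illustration, not the service's reader, and it makes several simplifications:

- Fields are split on plain commas, with no quote handling.
- Empty lines are ignored everywhere. The table above only says they are not counted toward skipLineCount.
- When there is no header, columns are named Prop_0, Prop_1, and so on, which is the naming the dataset table attributes to UI data preview and Lookup output. Copy activity instead locates such columns by ordinal.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: skipLineCount is applied before the header row is read.
public class SkipThenHeader {

    /** Column names plus the data rows that follow them. */
    public static final class Result {
        public final List<String> columns;
        public final List<List<String>> rows;

        Result(List<String> columns, List<List<String>> rows) {
            this.columns = columns;
            this.rows = rows;
        }
    }

    public static Result read(List<String> lines, int skipLineCount, boolean firstRowAsHeader) {
        List<String> columns = null;
        List<List<String>> rows = new ArrayList<>();
        int toSkip = skipLineCount;
        for (String line : lines) {
            if (line.isEmpty()) {
                continue; // simplification: empty lines are ignored and never count as skipped rows
            }
            if (toSkip > 0) {
                toSkip--; // step 1: skipLineCount is applied first
                continue;
            }
            List<String> fields = Arrays.asList(line.split(",", -1)); // naive split, no quoting
            if (firstRowAsHeader && columns == null) {
                columns = fields; // step 2: header is the first line left after skipping
            } else {
                rows.add(fields);
            }
        }
        if (columns == null) {
            // No header: generate Prop_0, Prop_1, ... from the width of the first data row
            columns = new ArrayList<>();
            int width = rows.isEmpty() ? 0 : rows.get(0).size();
            for (int i = 0; i < width; i++) {
                columns.add("Prop_" + i);
            }
        }
        return new Result(columns, rows);
    }

    public static void main(String[] args) {
        List<String> file = Arrays.asList(
                "exported by tool X", "", "generated 2024-01-01", "notes",
                "id,name", "1,alpha", "2,beta");
        Result r = read(file, 3, true);
        System.out.println(r.columns + " " + r.rows); // prints [id, name] [[1, alpha], [2, beta]]
    }
}
```

Here three preamble lines are skipped, which mirrors the skipLineCount: 3 setting in the copy activity example below. "id,name" then becomes the header.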

```json
"activities": [
    {
        "name": "CopyFromDelimitedText",
        "type": "Copy",
        "typeProperties": {
            "source": {
                "type": "DelimitedTextSource",
                "storeSettings": {
                    "type": "AzureBlobStorageReadSettings",
                    "recursive": true
                },
                "formatSettings": {
                    "type": "DelimitedTextReadSettings",
                    "skipLineCount": 3,
                    "compressionProperties": {
                        "type": "ZipDeflateReadSettings",
                        "preserveZipFileNameAsFolder": false
                    }
                }
            },
            ...
        }
        ...
    }
]
```

Delimited text as sink

The following properties are supported in the copy activity *sink* section.

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity sink must be set to DelimitedTextSink. | Yes |
| formatSettings | A group of properties. Refer to the Delimited text write settings table below. | No |
| storeSettings | A group of properties on how to write data to a data store. Each file-based connector has its own supported write settings under storeSettings. | No |

Supported delimited text write settings under formatSettings:

| Property | Description | Required |
|---|---|---|
| type | The type of formatSettings must be set to DelimitedTextWriteSettings. | Yes |
| fileExtension | The file extension used to name the output files, for example, .csv or .txt. It must be specified when fileName is not specified in the output DelimitedText dataset. When a file name is configured in the output dataset, it is used as the sink file name and the file extension setting is ignored. | Yes when file name is not specified in output dataset |
| maxRowsPerFile | When writing data into a folder, you can choose to write to multiple files and specify the maximum rows per file. | No |
| fileNamePrefix | Applicable when maxRowsPerFile is configured. Specifies the file name prefix when writing data to multiple files, resulting in this pattern: `<fileNamePrefix>_00000.<fileExtension>`. If not specified, the file name prefix is auto-generated. This property does not apply when the source is a file-based store or a partition-option-enabled data store. | No |
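The Java sketch below is a rough illustration of the naming pattern above. It computes how many files a sink would produce for a given maxRowsPerFile and what they would be called. It rests on three assumptions:

- The counter is zero-based with five digits. This is read off the `_00000` placeholder and is not stated elsewhere in this article.
- The class and method names are hypothetical.
- fileExtension is appended as given, because the example values in the table include the leading dot (.csv, .txt).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of <fileNamePrefix>_00000.<fileExtension> naming;
// the service's exact numbering scheme may differ.
public class SinkFileNames {

    public static List<String> plan(long totalRows, long maxRowsPerFile, String prefix, String extension) {
        if (maxRowsPerFile <= 0) {
            throw new IllegalArgumentException("maxRowsPerFile must be positive");
        }
        // Ceiling division: number of files needed to hold every row
        long fileCount = (totalRows + maxRowsPerFile - 1) / maxRowsPerFile;
        List<String> names = new ArrayList<>();
        for (long i = 0; i < fileCount; i++) {
            // Five-digit, zero-padded counter followed by the extension (which includes the dot)
            names.add(String.format("%s_%05d%s", prefix, i, extension));
        }
        return names;
    }

    public static void main(String[] args) {
        // 250,000 rows, maxRowsPerFile = 100,000, fileNamePrefix "orders", fileExtension ".csv"
        System.out.println(plan(250_000, 100_000, "orders", ".csv"));
        // prints [orders_00000.csv, orders_00001.csv, orders_00002.csv]
    }
}
```

The last file holds the remaining 50,000 rows, so it is smaller than the others.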

Mapping data flow properties

In mapping data flows, you can read and write to delimited text format in the following data stores: Azure Blob Storage, Azure Data Lake Storage Gen1, and Azure Data Lake Storage Gen2. You can read delimited text format in Amazon S3.

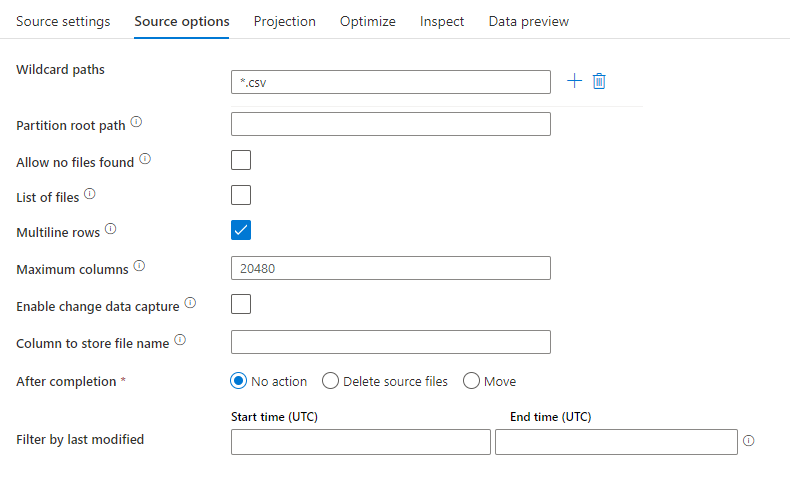

Source properties

The table below lists the properties supported by a delimited text source. You can edit these properties in the Source options tab.

| Name | Description | Required | Allowed values | Data flow script property |
|---|---|---|---|---|
| Wildcard paths | All files matching the wildcard path will be processed. Overrides the folder and file path set in the dataset. | no | String[] | wildcardPaths |
| Partition root path | For file data that is partitioned, you can enter a partition root path in order to read partitioned folders as columns. | no | String | partitionRootPath |
| List of files | Whether your source is pointing to a text file that lists files to process. | no | true or false | fileList |
| Multiline rows | Whether the source file contains rows that span multiple lines. Multiline values must be in quotes. | no | true or false | multiLineRow |
| Column to store file name | Create a new column with the source file name and path. | no | String | rowUrlColumn |
| After completion | Delete or move the files after processing. File path starts from the container root. | no | Delete: true or false; Move: `['<from>', '<to>']` | purgeFiles, moveFiles |
| Filter by last modified | Choose to filter files based upon when they were last altered. | no | Timestamp | modifiedAfter, modifiedBefore |
| Allow no files found | If true, an error is not thrown if no files are found. | no | true or false | ignoreNoFilesFound |
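The Multiline rows option depends on quoting. A line break inside a quoted value is data, while a line break outside quotes ends the row. The Java sketch below splits raw text into records on that basis. It is an illustration under stated assumptions, not the data flow implementation:

- quoteChar is `"`.
- Embedded quotes are doubled, that is, escapeChar is set to `"` as in the dataset example earlier.
- Any of \r\n, \r, or \n ends a record, matching the default row delimiters on read.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: split text into records, keeping line breaks that sit inside quotes.
// Assumes quoteChar '"' with doubled-quote escaping.
public class MultilineRecords {

    public static List<String> splitRecords(String text) {
        List<String> records = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            if (c == '"') {
                inQuotes = !inQuotes; // a doubled "" toggles twice, leaving the state unchanged
                current.append(c);
            } else if (!inQuotes && (c == '\n' || c == '\r')) {
                // Outside quotes, \r\n, \r and \n each end the current record
                if (c == '\r' && i + 1 < text.length() && text.charAt(i + 1) == '\n') {
                    i++; // consume the \n of a \r\n pair
                }
                records.add(current.toString());
                current.setLength(0);
            } else {
                current.append(c); // ordinary character, or a line break inside quotes
            }
        }
        if (current.length() > 0) {
            records.add(current.toString()); // last record without a trailing row delimiter
        }
        return records;
    }

    public static void main(String[] args) {
        String csv = "id,comment\r\n1,\"first line\nsecond line\"\r\n2,plain\n";
        List<String> records = splitRecords(csv);
        System.out.println(records.size()); // prints 3
    }
}
```

The second record keeps its embedded newline. A field-level parser can then split each record into columns.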

Note

Data flow source support for a list of files is limited to 1024 entries in your file. To include more files, use wildcards in your file list.
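If you generate the list file yourself, counting its entries before you upload it helps you avoid hitting the 1024-entry limit at run time. The Java sketch below does that count. It assumes the list holds one path per line and that blank lines are not counted; both are assumptions, and the class and method names are hypothetical. It uses only `java.nio.file.Files.readAllLines` and the Streams API.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical pre-flight check for a "List of files" source.
public class FileListCheck {

    // Limit stated for data flow "List of files" sources
    static final int MAX_ENTRIES = 1024;

    /** Assumption: one path per line; blank lines are not counted as entries. */
    public static List<String> entries(List<String> lines) {
        return lines.stream()
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .collect(Collectors.toList());
    }

    public static boolean withinLimit(List<String> lines) {
        return entries(lines).size() <= MAX_ENTRIES;
    }

    /** Checks a local copy of the list file (read as UTF-8). */
    public static boolean withinLimit(Path listFile) throws IOException {
        return withinLimit(Files.readAllLines(listFile));
    }

    public static void main(String[] args) {
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < 1500; i++) {
            lines.add("input/part-" + i + ".csv"); // hypothetical paths
        }
        System.out.println(withinLimit(lines)); // prints false: 1500 entries exceed 1024
    }
}
```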

Source example

The image below is an example of a delimited text source configuration in mapping data flows.

The associated data flow script is:

```
source(
    allowSchemaDrift: true,
    validateSchema: false,
    multiLineRow: true,
    wildcardPaths:['*.csv']) ~> CSVSource
```

Note

Data flow sources support a limited set of Linux globbing that is supported by Hadoop file systems.
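You can preview locally which relative paths a pattern such as `*.csv` would select by using Java's built-in glob matcher. Treat the result as an approximation only. Java's glob syntax (`FileSystems.getDefault().getPathMatcher("glob:...")`) is not the Hadoop globbing that data flow sources use. The path list here is made up, and the sketch assumes a POSIX-style default file system with `/` as the separator.

```java
import java.nio.file.FileSystems;
import java.nio.file.Path;
import java.nio.file.PathMatcher;
import java.util.List;
import java.util.stream.Collectors;

// Approximate local preview of a wildcard path using Java glob syntax (not Hadoop's).
public class WildcardPreview {

    public static List<String> select(String glob, List<String> relativePaths) {
        PathMatcher matcher = FileSystems.getDefault().getPathMatcher("glob:" + glob);
        return relativePaths.stream()
                .filter(p -> matcher.matches(Path.of(p))) // matches against the whole relative path
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> files = List.of("sales.csv", "sales.txt", "2023/jan.csv", "2023/feb/week1.csv");
        System.out.println(select("*.csv", files));      // prints [sales.csv]
        System.out.println(select("2023/*.csv", files)); // prints [2023/jan.csv]
        System.out.println(select("**/*.csv", files));   // prints [2023/jan.csv, 2023/feb/week1.csv]
    }
}
```

In Java's syntax, `*` stops at a directory separator while `**` crosses it. That is why `*.csv` picks only the top-level file here. Check the equivalent behavior against your actual data flow before relying on it.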

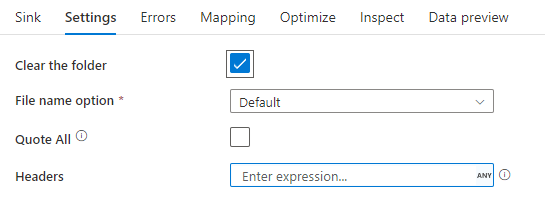

Sink properties

The table below lists the properties supported by a delimited text sink. You can edit these properties in the Settings tab.

| Name | Description | Required | Allowed values | Data flow script property |
|---|---|---|---|---|
| Clear the folder | Whether the destination folder is cleared prior to the write. | no | true or false | truncate |
| File name option | The naming format of the data written. By default, one file per partition in the format `part-#####-tid-<guid>`. | no | Pattern: String; Per partition: String[]; Name file as column data: String; Output to single file: `['<fileName>']`; Name folder as column data: String | filePattern, partitionFileNames, rowUrlColumn, partitionFileNames, rowFolderUrlColumn |
| Quote all | Enclose all values in quotes. | no | true or false | quoteAll |
| Header | Add custom headers to output files. | no | `[<string array>]` | header |
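The Quote all option changes how each value is enclosed in the output. The Java sketch below contrasts quoteAll = true with quote-only-when-needed output, for a header row and a data row. It is a hypothetical illustration with these assumptions:

- The column delimiter is a comma and quoteChar is `"`.
- Embedded quotes are escaped by doubling them, that is, escapeChar equals quoteChar as in the dataset example earlier. With the default backslash escapeChar, embedded quotes would be escaped with `\` instead.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical sketch of quoteAll behavior; assumes ',' delimiter and '"' quote with "" escaping.
public class QuoteAllWriter {

    static String formatValue(String value, boolean quoteAll) {
        // Without quoteAll, quote only values containing the delimiter, a quote, or a line break
        boolean needsQuotes = quoteAll
                || value.indexOf(',') >= 0
                || value.indexOf('"') >= 0
                || value.indexOf('\n') >= 0
                || value.indexOf('\r') >= 0;
        if (!needsQuotes) {
            return value;
        }
        return "\"" + value.replace("\"", "\"\"") + "\""; // double any embedded quotes
    }

    public static String formatRow(List<String> values, boolean quoteAll) {
        return values.stream()
                .map(v -> formatValue(v, quoteAll))
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        List<String> header = List.of("id", "comment"); // e.g. custom header values
        List<String> row = List.of("7", "said \"ok\", then left");
        System.out.println(formatRow(header, true)); // prints "id","comment"
        System.out.println(formatRow(row, false));   // prints 7,"said ""ok"", then left"
    }
}
```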

Sink example

The image below is an example of a delimited text sink configuration in mapping data flows.

The associated data flow script is:

```
CSVSource sink(
    allowSchemaDrift: true,
    validateSchema: false,
    truncate: true,
    skipDuplicateMapInputs: true,
    skipDuplicateMapOutputs: true) ~> CSVSink
```

Here are some common connectors and formats related to the delimited text format:

- Azure Blob Storage (connector-azure-blob-storage.md)

- Binary format (format-binary.md)

- Dataverse (connector-dynamics-crm-office-365.md)

- Delta format (format-delta.md)

- Excel format (format-excel.md)

- File System (connector-file-system.md)

- FTP (connector-ftp.md)

- HTTP (connector-http.md)

- JSON format (format-json.md)

- Parquet format (format-parquet.md)

Next steps

- Copy activity overview

- Mapping data flow

- Lookup activity

- GetMetadata activity


Source: https://docs.microsoft.com/en-us/azure/data-factory/format-delimited-text
